This project demonstrates full observability (logs, traces, and metrics) in a Python (Flask) application using OpenTelemetry, Elastic APM, and the OpenTelemetry Collector. We show how to instrument a simple app manually, export telemetry using OTLP gRPC, and ship all data to Elastic Cloud.

A small Flask app with:

- ✅ Manual tracing using OpenTelemetry SDK

- ✅ Manual metrics instrumentation

- ✅ Manual logging with OpenTelemetry context enrichment

- ✅ Logs exported via OTLP to OpenTelemetry Collector

- ✅ Collector exports all telemetry to Elastic APM

How Data Flows

    [Flask App]
    │
    ├── Traces  --> Manual instrumentation using OpenTelemetry SDK
    │       → OTLP gRPC Exporter → otel-collector → Elastic APM (Traces)
    │
    ├── Metrics --> Manual instrumentation using OTEL SDK (create_counter)
    │       → PeriodicExportingMetricReader → otel-collector → Elastic APM (Metrics)
    │
    └── Logs    --> Standard Python logging + OTEL enrichment
            → OTLP gRPC via LoggingOTLPHandler → otel-collector → Elastic APM (Logs)

Everything is shipped from the app to otel-collector over gRPC (port 4317). The collector forwards it securely to Elastic Cloud using an APM endpoint and secret token.
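Since the app has to know where the collector listens, here is a minimal sketch of resolving that address from the `OTEL_EXPORTER_OTLP_ENDPOINT` environment variable, which the Compose file in this post sets. The helper name `collector_endpoint` and the rule "anything that is not `https` is treated as insecure" are assumptions made for illustration, not code from the project.

```python
import os
from urllib.parse import urlsplit

# Fallback matches the value used in this post's docker-compose.yml.
DEFAULT_ENDPOINT = "http://otel-collector:4317"


def collector_endpoint(env=None):
    """Return (host, port, insecure) for the collector's OTLP gRPC endpoint.

    `env` is any mapping of environment variables; it defaults to os.environ.
    """
    env = os.environ if env is None else env
    raw = env.get("OTEL_EXPORTER_OTLP_ENDPOINT", DEFAULT_ENDPOINT)
    parts = urlsplit(raw)
    if not parts.hostname:
        raise ValueError(f"not a valid endpoint URL: {raw!r}")
    port = parts.port or 4317          # 4317 is the conventional OTLP gRPC port
    insecure = parts.scheme != "https"  # assumption: plain http means no TLS
    return parts.hostname, port, insecure
```

Reading the endpoint in one place means the same image can point at a different collector without a code change.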

Instrumentation Strategy

- Traces: Manually instrumented using OpenTelemetry’s `Tracer` to track requests across Flask routes and background tasks.

- Metrics: A custom request counter is implemented via `Meter` and `create_counter` for tracking throughput.

- Logs: Structured logs are enriched with OTEL context (e.g., trace IDs) and exported via the `LoggingOTLPHandler`.

This hybrid approach ensures observability while maintaining control over instrumentation costs.
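To make the log enrichment step concrete, here is a small sketch of a standard-library `logging.Filter` that stamps every record with hex-formatted trace and span IDs. The names `TraceContextFilter`, `format_ids`, and `build_logger` are hypothetical, and the IDs come from an injected callable so the sketch runs without the OpenTelemetry SDK; in the real app that callable would presumably read them from `trace.get_current_span().get_span_context()`.

```python
import logging
from typing import Callable, Tuple


def format_ids(trace_id: int, span_id: int) -> Tuple[str, str]:
    """Render integer IDs as lowercase hex: 32 chars for traces, 16 for spans."""
    return format(trace_id, "032x"), format(span_id, "016x")


class TraceContextFilter(logging.Filter):
    """Attach trace_id and span_id attributes to every log record."""

    def __init__(self, get_ids: Callable[[], Tuple[int, int]]) -> None:
        super().__init__()
        self._get_ids = get_ids  # hypothetical hook returning (trace_id, span_id)

    def filter(self, record: logging.LogRecord) -> bool:
        record.trace_id, record.span_id = format_ids(*self._get_ids())
        return True  # never drop the record, only enrich it


def build_logger(name: str, get_ids: Callable[[], Tuple[int, int]], stream) -> logging.Logger:
    """Create a logger whose output lines carry the current trace context."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter(
        "%(levelname)s trace_id=%(trace_id)s span_id=%(span_id)s %(message)s"))
    handler.addFilter(TraceContextFilter(get_ids))
    logger.addHandler(handler)
    return logger
```

Formatting the IDs as hex strings keeps them searchable in the same form that trace views display them.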

Project Structure

    project-root/
    ├── app/
    │   ├── main.py              # Flask app with OTEL SDK
    │   └── requirements.txt     # Python deps
    ├── collector-config.yaml    # OpenTelemetry Collector config
    └── docker-compose.yml       # Container setup

Code Highlights

    # Manual Tracing + Metrics + Logging
    # `resource` and `LoggingOTLPHandler` are not shown in this excerpt.
    import logging

    from opentelemetry import metrics, trace
    from opentelemetry.sdk.trace import TracerProvider

    trace.set_tracer_provider(TracerProvider(resource=resource))
    tracer = trace.get_tracer(__name__)
    meter = metrics.get_meter(__name__)

    logger = logging.getLogger("flask-app")
    logger.addHandler(LoggingOTLPHandler(endpoint="http://otel-collector:4317", insecure=True))
    logger = logging.LoggerAdapter(logger, extra={"custom": "value"})

Background thread for traces/metrics

    def generate_traces_and_metrics():
        while True:
            with tracer.start_as_current_span("background_transaction") as span:
                request_counter.add(1, {"endpoint": "/background"})
                logger.info("Background tick", extra={"otel_span_id": span.get_span_context().span_id})
            time.sleep(2)

OpenTelemetry Collector Config (collector-config.yaml)

    receivers:
      otlp:
        protocols:
          grpc:
          http:

    exporters:
      debug:
      otlp/elastic:
        endpoint: "https://<your-apm>.elastic.co:443"
        headers:
          Authorization: "Bearer <your-token>"

    service:
      pipelines:
        traces:
          receivers: [otlp]
          exporters: [debug, otlp/elastic]
        metrics:
          receivers: [otlp]
          exporters: [debug, otlp/elastic]
        logs:
          receivers: [otlp]
          exporters: [debug, otlp/elastic]

Docker Compose

services:

flask-app:

build: ./app

ports:

- "8085:8085"

environment:

- OTEL_EXPORTER_OTLP_ENDPOINT=http://otel-collector:4317

depends_on:

- otel-collector

otel-collector:

image: otel/opentelemetry-collector-contrib:latest

volumes:

- ./collector-config.yaml:/etc/otelcol/config.yaml

ports:

- "4317:4317" # OTLP gRPC

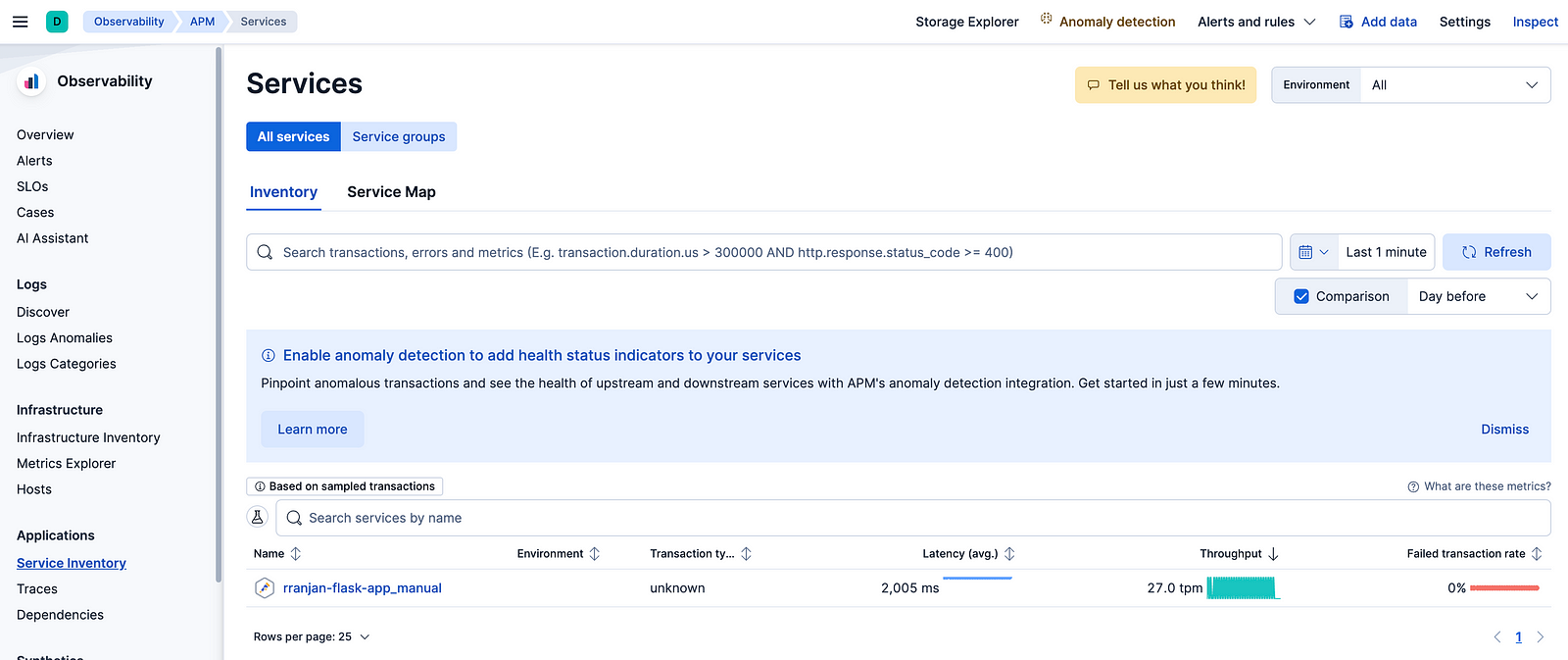

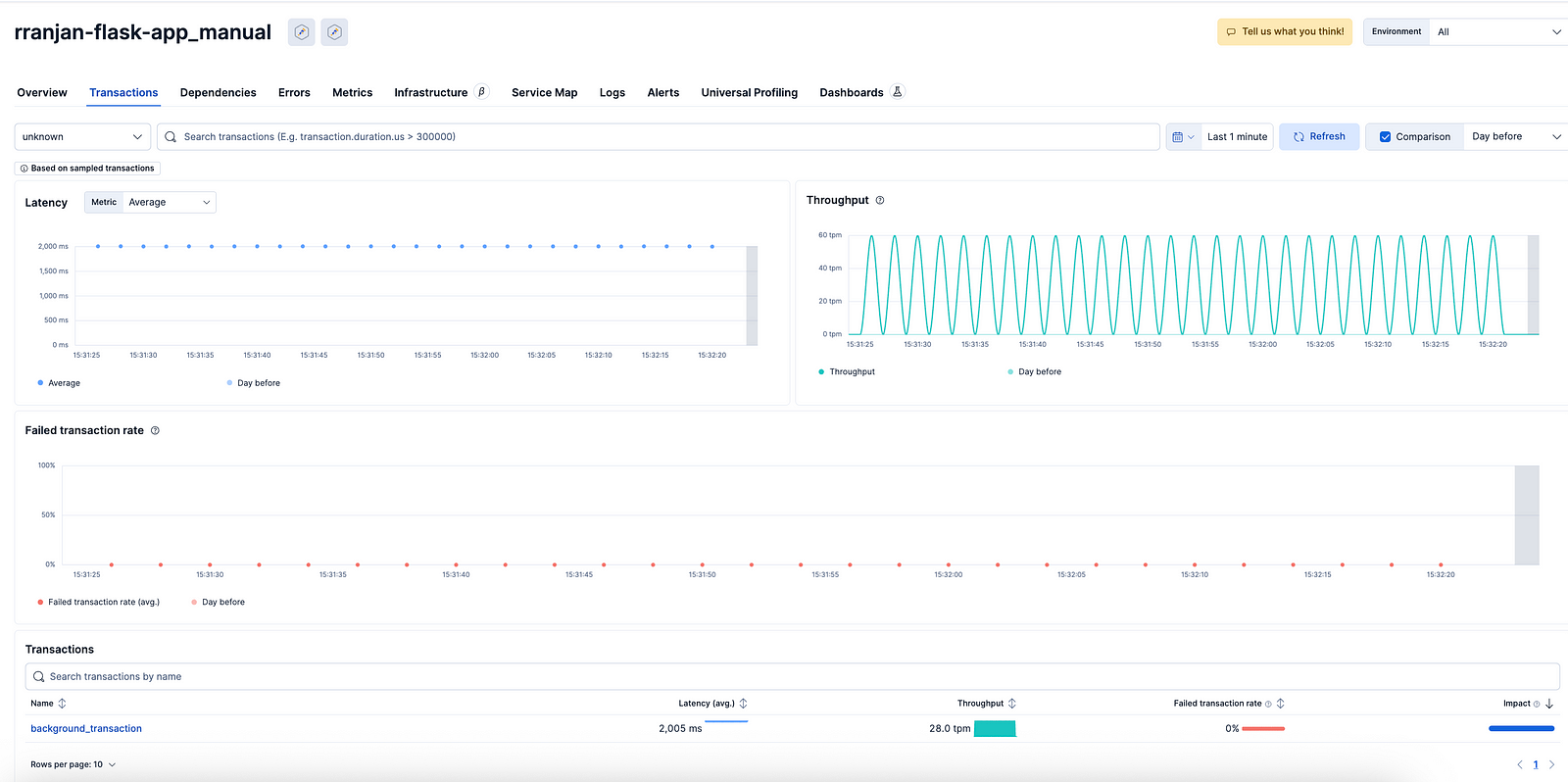

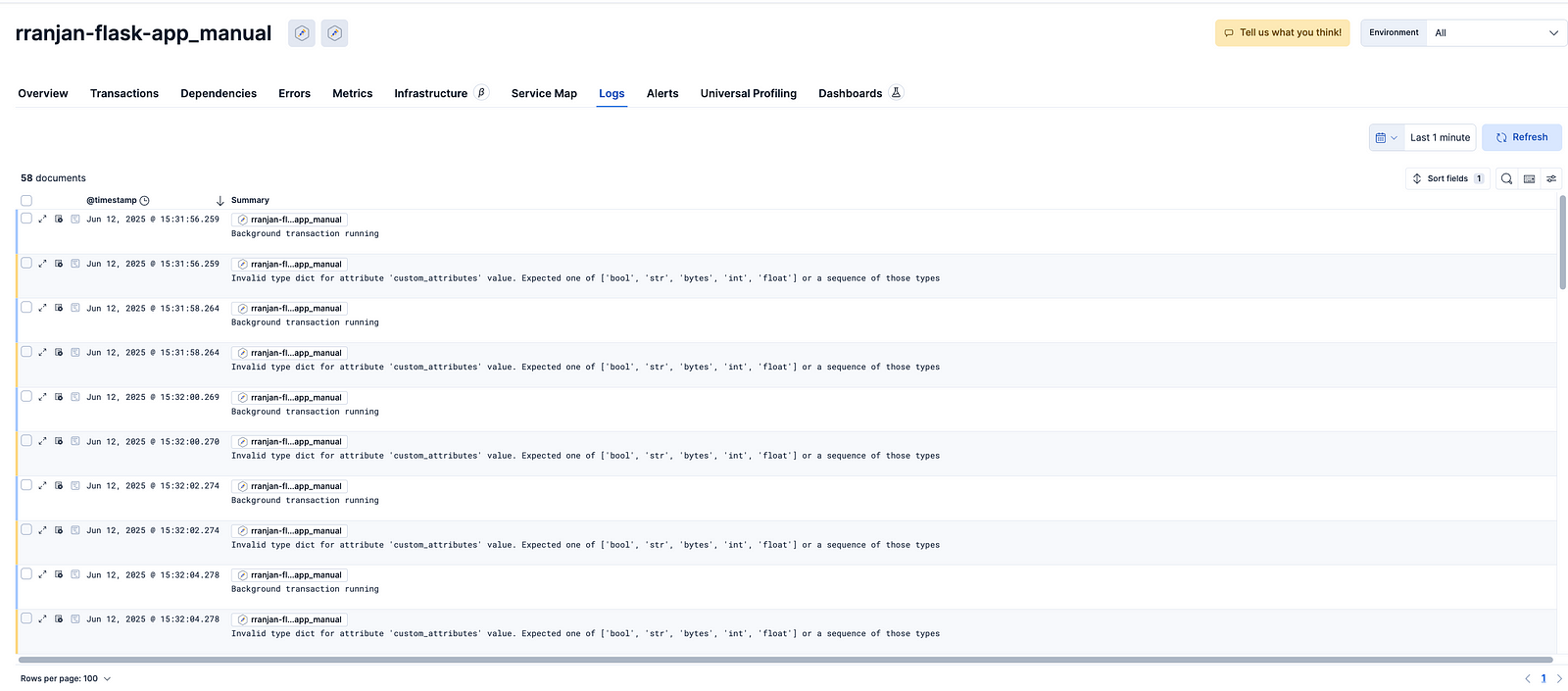

- "4318:4318" # OTLP HTTPVisualizing in Kibana (Elastic APM)

Once ingested:

- Traces show span durations, service map, dependency flow

- Metrics show counters like request rates per endpoint

- Logs include enriched fields (trace_id, span_id, severity, endpoint)

How to Test All Endpoints

    # Root
    curl http://localhost:8085/

    # Upload endpoint
    curl http://localhost:8085/upload

    # Process endpoint
    curl http://localhost:8085/process

    # Report endpoint
    curl http://localhost:8085/report

    # Batch job
    curl http://localhost:8085/batch-job

    # Simulate error (for APM alerting)
    curl http://localhost:8085/simulate-error
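The same checks can be scripted. The following is a rough sketch using only the Python standard library; the function names `fetch_status` and `smoke_test` are made up for this example, and the injectable `fetch` parameter exists only so the logic can be exercised without a running app.

```python
import urllib.error
import urllib.request
from typing import Callable, Dict, Iterable

# The routes listed in this post.
PATHS = ("/", "/upload", "/process", "/report", "/batch-job", "/simulate-error")


def fetch_status(url: str, timeout: float = 5.0) -> int:
    """Return the HTTP status code of a GET request, including 4xx/5xx."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # urlopen raises on 4xx/5xx responses; the status code is still useful.
        return err.code


def smoke_test(
    base_url: str,
    paths: Iterable[str] = PATHS,
    fetch: Callable[[str], int] = fetch_status,
) -> Dict[str, int]:
    """Request every path once and return a {path: status_code} mapping."""
    base = base_url.rstrip("/")
    return {path: fetch(base + path) for path in paths}
```

With the stack running, `smoke_test("http://localhost:8085")` returns one status code per route. Which code `/simulate-error` returns depends on how the app raises its error, so the sketch does not assume a particular value.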

Each route creates:

- a trace with custom labels (`job`, `status`, `env`, `region`, etc.)

- a metric counter

- a log line with `trace_id` and `span_id` for correlation

Troubleshooting Tips

StatusCode.UNAVAILABLE on exporter

- Cause: OTEL Collector not ready

- Fix: Ensure `otel-collector` is started before the app

- Used `depends_on` in docker-compose
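Start order alone does not guarantee that the collector is already accepting connections when the app boots. As a complementary sketch (not part of the original project), the app could poll the collector's port before creating its exporters. The helper name `wait_for_port` and its default timings are assumptions.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Poll until a TCP connection to host:port succeeds or `timeout` elapses.

    Returns True once the port accepts a connection, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Connection refused, DNS not ready yet, or connect timed out.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

Calling `wait_for_port("otel-collector", 4317)` at startup would block until the gRPC port is open. A successful TCP connection only shows that something is listening; it does not prove the pipelines are healthy.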

logging exporter deprecated

- Error: Collector fails with `logging exporter is deprecated`

- Fix: Switched to the `debug` exporter

gRPC localhost binding in the collector

- Issue: The Collector used `localhost:4317`, which is not accessible from other containers

- Fix: Change the endpoints to `0.0.0.0:4317` (gRPC) and `0.0.0.0:4318` (HTTP) to bind to all interfaces

    receivers:
      otlp:
        protocols:
          grpc:
            endpoint: 0.0.0.0:4317   # <- changed from localhost
          http:
            endpoint: 0.0.0.0:4318   # <- changed from localhost

Summary

This setup gives you a ready-to-deploy observability stack:

- Manual instrumentation with OpenTelemetry in Python

- Telemetry routed to Elastic by the collector

- Logs, metrics, and traces correlated via trace/span IDs

- All data accessible and visualizable in Elastic APM

The code will be uploaded to my GitHub.

Reach out on LinkedIn with any questions, or leave a comment.